.png)

What is MPC?

Blockchain enthusiasts like to talk about trust, and for good reason. Trust is the foundation of our civilizations: trust in your family and your neighbors kept early human groups functioning. Trust in man-made institutions like governments, fiat money, and the rule of law, have been the motors of our current civilization. But all these instances require an or else in case of misbehavior: being shunned by your close ones, or a good old lawsuit. In the last decades however, new mathematics, especially in game theory and cryptography, gave us systems where misbehaving is impossible. It is our thesis that these systems will be a foundational piece of the societies we are moving toward. Contracts that cannot be broken will always inspire more trust than contracts that should not be broken, or else.

Most of this enthusiasm has so far been directed towards the institution of money. Bitcoin democratized the idea of a global network that essentially guarantees the soundness of its own value. But the implications of this shift in trust are broader still, and few have truly grasped the breadth of its potential. These technologies will not just transform money, but the way we deal with information.

Imagine you are a large image repository that spent millions producing and acquiring your library of images, but you are understandably wary that an AI company will siphon your data to train its models, all without fair financial compensation. You may hire an entire legal department to write licensing agreements and NDAs, and spend millions litigating any abuse by your clients, if you even notice it in the first place. But what if, instead of that, there existed a technology which guaranteed you and your client behaved honestly? What if the fair rules were guaranteed to be enforced, not by an army of lawyers, but by cryptography?

Multiparty computation, or MPC for short, is one such technology. If Bitcoin exemplified a game where money remains sound even in the face of dishonest participants, then MPC is its equivalent for information ownership. In the above scenario, the image library could set up a game between them and their client, which allowed the client to train a model on their images, but only once. These sorts of situations, where privacy is a game-changer, arise all the time in AI, healthcare, industry R&D, politics, and many other places. And while in this case there was an institutional authority that could plausibly enforce a legal contract, many situations (e.g. in geopolitics) have no such authority. MPC, by enabling cooperation between distrusting parties, opens a whole new whitespace for humanity, enabling new forms of coordination, science, trade, and freedom to flourish.

In this series, we will explore MPC, providing an introduction to its core concepts and then focusing on how Arcium harnesses it to bring programmable privacy to blockchains.

MPC comes in many flavors that fit different scenarios, and that fall broadly on a quadrant. If the majority of your peers can be trusted to behave honestly, you will pick an honest majority protocol. If you can only trust yourself, you will pick a dishonest majority protocol. In the same vein, if all your peers can be assumed to follow the protocol without deviating, you will pick a semi-honest (also more descriptively called honest-but-curious) protocol. Conversely, protocols secure against any adversary are called maliciously secure.

Why would you ever pick the less safe options when fully secure ones exist? In one word, efficiency. These stronger assumptions enable powerful optimizations and shortcuts that the safer alternatives do not; faster computations, less bandwidth, and fewer communications rounds are all attractive propositions. And while these less safe protocols are sometimes used adversarial contexts like open blockchains, they are always combined with other primitives to supplement their security. For example, Stoffel MPC uses Trusted Execution Environments (TEEs), while TACEO uses coSNARKS, both of which provide additional guarantees but introduce their own set of tradeoffs.

Arcium utilizes the safest form of MPC, that is to say dishonest majority and malicious security. This is also the flavor we will be exploring the most in this series. For explorations of other flavors, we recommend reading Daniel Escudero’s A Crash Course in MPC series.

Broadly, our flavor of MPC functions in two phases: one called the offline phase and another called the online phase. You can think of the offline phase (which, despite its name, still involves communication!) as an ongoing process that produces a form of fuel called preprocessing. When an actual computation is done during the online phase, it consumes some of this fuel. This model is advantageous in that it allows parties to move the slow and heavy cryptography to the offline phase, which can be conducted at any time. Since the heavy lifting has been done ahead of time, the online phase can stay very lean, which leads to faster computation times and better UX. Since these two phases are fairly distinct, we will treat them in separate sections, beginning with the simpler of the two, the online phase.

The online phase

Secret sharing

Shared computation implies shared information. But the condition that this data stay private poses a problem. How can we expect parties to compute on data that they have no knowledge over? The answer: secret sharing!

Secret sharing schemes are one of the central primitives of cryptography. They allow different people to hold fragments of some secret, not learning anything about it unless they meet some condition, typically combining T out of N fragments to recover the secret. While several schemes exist, we will focus on the simplest one, which is additive secret sharing.

Additive secret sharing takes a secret \( x \) and encodes it over a field.

It generates \( N \) random-looking secret shares (our fragments) \( \{x_i\} \) such that:

\[

\sum_{i=1}^{N} x_i = x

\]

Since the \( x_i \) all look random, even if a single share is missing, the result will appear random and reveal nothing about \( x \). When all parties broadcast their shares to reconstruct \( x \), we say they open \( x \). Openings are the single biggest source of network communication, since every party needs to send some number \( S \) bytes to all other parties, and receive the same amount from everyone. We say that the bandwidth usage scales as \( \mathcal{O}(N^2) \).

So we have a way to share information trustlessly. If only we also had a way to compute over it… which is exactly what the online phase is! It compiles a series of little protocols that explain how to go from shares \( \lbrack\!\lbrack x \rbrack\!\rbrack \) and \( \lbrack\!\lbrack y \rbrack\!\rbrack \) to a share of \( \lbrack\!\lbrack x + y \rbrack\!\rbrack \), \( \lbrack\!\lbrack x * y \rbrack\!\rbrack \), and so on. With a small set of operations, we’re then able to write any program in this arithmetic form, which we call an arithmetic circuit. Let’s go over the most important protocols, addition and multiplication.

Addition is the simplest one: to get \( \lbrack\!\lbrack x + y \rbrack\!\rbrack \) from \( \lbrack\!\lbrack x \rbrack\!\rbrack \) and \( \lbrack\!\lbrack y \rbrack\!\rbrack \), you add your shares locally, i.e. \( \lbrack\!\lbrack x \rbrack\!\rbrack + \lbrack\!\lbrack y \rbrack\!\rbrack \). You can easily see that:

\[

\sum_i x_i + \sum_i y_i = \sum_i (x_i + y_i) = x + y

\]

Now, how do you multiply? The naïve approach would be to the same as above and multiply both shares together. Let’s see why that doesn’t work: \[

\sum_i (x_i y_i) \neq \left( \sum_i x_i \right)\!\left( \sum_i y_i \right)

\]

Indeed, it is not sufficient to multiply the shares, as the total also includes cross-products between your share and other parties’ shares. Intuitively, this means that some form of communication will be involved. How do we multiply secret shares between themselves?

This is where some of the preprocessing we mentioned above comes in. During the offline phase, the parties generate shares of a Beaver triple, which are three random field elements \( (a, b, c) \) bound by the relationship \( a * b = c \). Each party receives secret shares of \( a \), \( b \), and \( c \). Once those are available, the instructions to multiply two shares \( \lbrack\!\lbrack x \rbrack\!\rbrack \) and \( \lbrack\!\lbrack y \rbrack\!\rbrack \) are as follows:

- Every party \( P_i \) computes \( \lbrack\!\lbrack \varepsilon \rbrack\!\rbrack_i = \lbrack\!\lbrack x \rbrack\!\rbrack_i - \lbrack\!\lbrack a \rbrack\!\rbrack_i \) and \( \lbrack\!\lbrack \delta \rbrack\!\rbrack_i = \lbrack\!\lbrack y \rbrack\!\rbrack_i - \lbrack\!\lbrack b \rbrack\!\rbrack_i \).

- Open both shares, get \( \varepsilon \) and \( \delta \).

- Every party \( P_i \) computes \( \lbrack\!\lbrack x * y \rbrack\!\rbrack_i = \lbrack\!\lbrack c \rbrack\!\rbrack_i + \lbrack\!\lbrack a \rbrack\!\rbrack_i * \delta + \lbrack\!\lbrack b \rbrack\!\rbrack_i * \varepsilon \).

Remembering that \( a * b = c \), it’s easy to see why this relationship holds. What we’ve essentially done is mask our input wires such that no one learns anything of value when opening \( \varepsilon \) and \( \delta \), while still being able to use them to deduce a share of the product.

Other operations which involve plaintexts (e.g. multiplying a public value \( c \) by a secret share \( \lbrack\!\lbrack x \rbrack\!\rbrack \)) are very easy and almost always local.

As you can see, certain operations require communication between parties. Astute readers might remember the short intro on threat models: what if you can’t trust your peers to behave honestly, how would you know if they sent you the correct pieces of information? This is true: if you only send secret shares on their own, you could not distinguish correct from incorrect data. In this scenario, MPC protocols often make use of a simple but somewhat magical primitive: the MAC (or Message Authentication Code).

We can assume that at each step of the protocol, every secret share is authenticated, meaning it comes with some extra information that helps the receiver check whether it is correct. For a MAC scheme to be secure, it needs to be unforgeable: a malicious sender could not find a MAC for an incorrect piece of data that would get accepted by the receiver, except with very low probability.

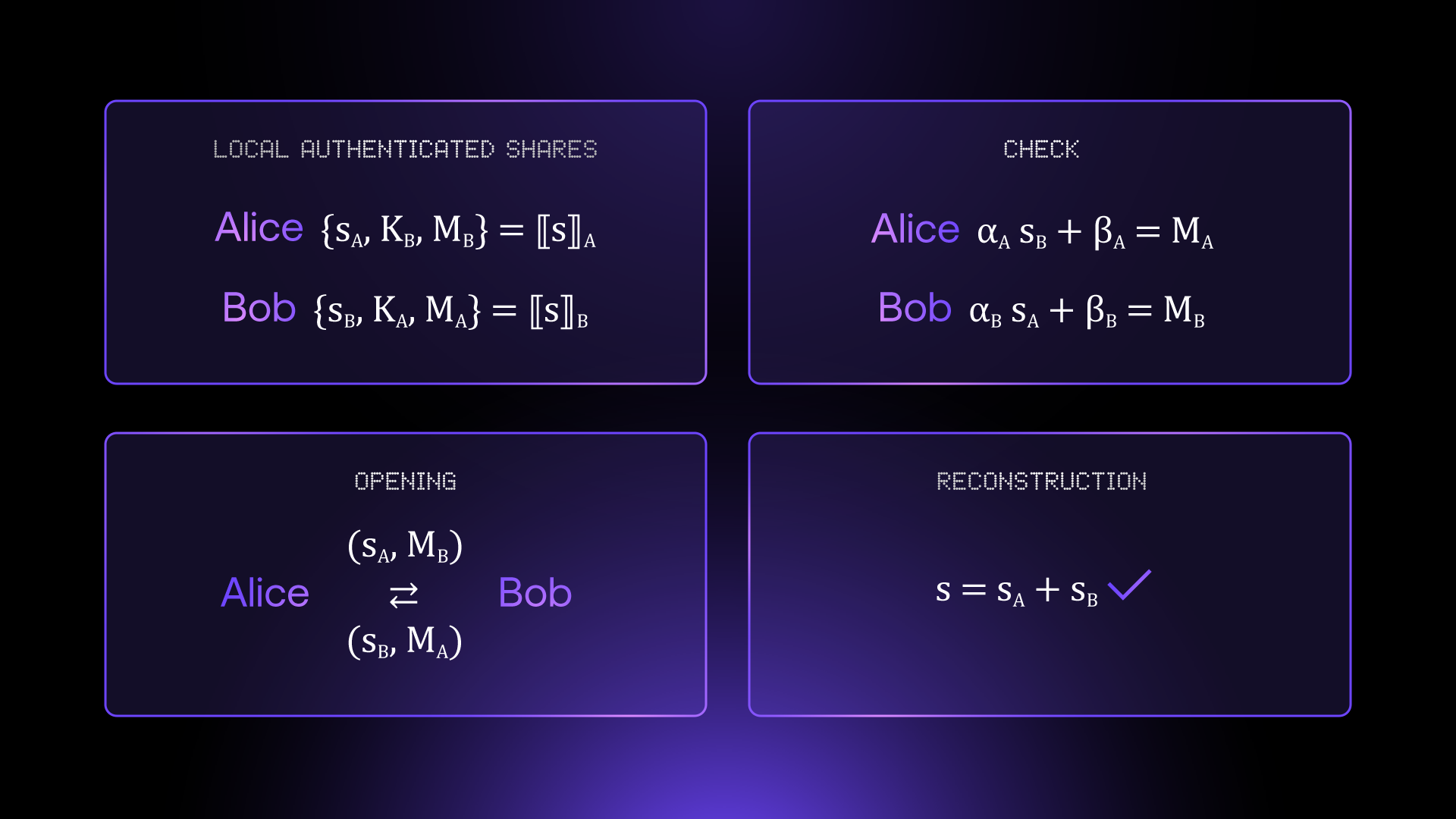

In our protocol, BDOZ, a secret share is authenticated in the following way: say \( x \) is your secret share, \( K = (\alpha, \beta) \) is the receiver’s MAC key, and \( \mathrm{MAC}(x) = \alpha * x + \beta \) is the MAC. Since the sender does not know \( K \), she is not able to compute a correct MAC for any other secret share. And of course, the receiver does not know the sender’s secret share \( x \) in advance. In addition, the scheme is additively homomorphic, meaning there is a way to add two authenticated secret shares together such that the MACs and keys remain correct:

\[

\lbrack\!\lbrack x \rbrack\!\rbrack + \lbrack\!\lbrack y \rbrack\!\rbrack = \{ x + y, \mathrm{MAC}(x) + \mathrm{MAC}(y) \}, \quad K_x + K_y = (\alpha, \beta_x + \beta_y)

\]

This gives us a scheme where parties can compute on secret shares and plaintexts in such a way that a deviating party will always be caught! Of course, we are presenting only part of the protocol: for the full outline, we suggest you read the great [BDOZ11] paper where this protocol was first presented. Additionally, how do the senders and receivers produce these MACs and keys in a secure way, without seeing each other’s values? We will reveal all these secrets in the offline phase section!

From BDOZ to Cerberus

While the original BDOZ online phase is already very efficient, involving no complex arithmetic, we expand and improve it in different ways in our custom Cerberus protocol.

We only talked about computing over a single prime field so far. But Cerberus allows for arbitrarily many prime fields to coexist within a circuit, and adds a binary backend that allows conversions between them. In addition, it allows for circuits over elliptic curve (EC) points: this means that local protocols over EC points (for example, many zero-knowledge proof schemes) can be executed natively in the multiparty setting (becoming so-called coSNARKs) without having to emulate EC arithmetic. This has allowed us to port many cryptographic protocols to an MPC setting with ease, and with little additional overhead: so far this has been the case with ElGamal encryption and Bulletproofs, for example.

In addition, many operations can be efficiently batched, reducing both computational cost and communication. For example, assume that you need to send out \( M \) authenticated secret shares to another party. Instead of sending one MAC for each secret share, you can simply send a single MAC which authenticates the whole batch. This can be done using a Fiat-Shamir transform in the following way:

- The sender adds their values to a shared transcript1, which produces a seed \( s \). An RNG is initialized using \( s \).

- Produce \( M \) random values \( \{ r_0, ..., r_{M-1} \} \) using your RNG.

- Sender computes the batched MAC by doing a random linear combination: $\mathrm{MAC}(\lbrace x_i \rbrace_{i < M}) = \sum_i \mathrm{MAC}(x_i)\, r_i$

- Receiver performs a batched check, i.e. she checks that $\sum_i r_i (\alpha x_i + \beta_i) = \mathrm{MAC}(\lbrace x_i \rbrace_{i < M})$, which is equal to the batched MAC she received.

Asymptotically, this essentially halves the bandwidth usage compared to a vanilla BDOZ implementation. Additionally, we make use of a lesser-known network primitive called multicast. Multicast allows a peer to broadcast some piece of data to a set of \( N \) parties without having to re-send it \( N \) times. This brings overall bandwidth scaling down from \( \mathcal{O}(N^2) \) to a much more manageable \( \mathcal{O}(N) \).

Additionally, while the original BDOZ is only secure-with-abort (meaning a party that witnesses cheating will only abort the protocol without being able to prove to other parties who cheated), Cerberus plans on integrating publicly identifiable abort (or PID-abort). In this scenario, any party witnessing cheating will be able to produce an externally verifiable proof, allowing anyone to check that they aborted correctly and identify the cheating party. This is done using the compiler from [BMRS24], and will be described in an upcoming writeup in closer detail.

All these improvements bring down bandwidth usage significantly, which is one of the main bottlenecks of MPC. The other, latency, is mostly tied to how far apart the parties are from each other. However, since Arcium’s decentralization is guaranteed by the network itself, the computing parties do not need to be geographically far apart, which greatly mitigates these problems: this allows us to easily assume <5ms of latency while preserving trustlessness.

Footnotes:

1: This requires some care, as all Fiat-Shamir transforms do, and we make sure to include all public parameters and proper domain separation to ensure security.

.svg)

.svg)

.svg)

.jpg)

.jpg)